Interpretable machine learning focuses on building models whose behavior people can understand and check, which supports trust and regulatory compliance. Python libraries such as scikit-learn and XGBoost make it practical to build these models and explain their predictions.

1.1. Definition and Importance of Model Interpretability

Model interpretability is the degree to which a human can understand why a machine learning system makes a given decision or prediction. It is crucial for building trust, ensuring accountability, and detecting biases. Interpretable models let stakeholders see how inputs influence outputs, which makes the system transparent. This is particularly important in sensitive domains such as healthcare and finance, where decisions must be explainable. Techniques such as LIME and SHAP are widely used to explain complex models, and Python libraries implement both.

1.2. The Need for Transparency in Machine Learning Models

Transparency in machine learning models is essential for ethical decision-making, regulatory compliance, and user trust. Without it, models operate as “black boxes,” and biases or errors are hard to detect. This lack of accountability can lead to unintended consequences, especially in critical areas such as healthcare and finance. Python tools such as LIME and SHAP help expose how a model reaches its decisions, so developers can audit and refine their systems. Transparency helps verify that a model is not only accurate but also fair and reliable.

Key Concepts and Principles of Interpretable Machine Learning

Interpretable ML emphasizes simplicity, explainability, and transparency. Models should align with human understanding, enabling clear explanations of decisions. Python tools like scikit-learn and SHAP facilitate this.

2.1. Fundamental Principles of Interpretable Models

Interpretable models follow principles such as simplicity and transparency. They should align with human understanding so that users can follow how decisions are made. Python libraries such as scikit-learn and XGBoost provide tools to build and interpret models. Key aspects include feature importance, explainability of individual predictions, and avoiding unnecessary complexity. These principles support trust, accountability, and regulatory compliance, make models accessible to non-experts, and help data scientists and domain experts work together.

2.2. Trade-offs Between Model Complexity and Interpretability

Complex models often achieve higher accuracy but sacrifice interpretability, which makes their decisions opaque. Simpler models, such as linear regression, are interpretable but may underperform on complex relationships. Techniques such as regularization and feature selection can reduce complexity while preserving much of the accuracy. Post-hoc tools such as LIME and SHAP explain complex models after training, offering a middle ground. Navigating this trade-off requires weighing model goals, domain requirements, and stakeholder needs so that transparency does not come at an unacceptable cost in performance.

Techniques for Building Interpretable Models

Techniques include linear models, decision trees, and feature importance methods. Python libraries such as scikit-learn and XGBoost provide tools to build simple models and to explain complex ones.

3.1. Linear Models and Their Interpretability

Linear models are foundational to interpretable machine learning because of their transparency. Each coefficient gives the change in the predicted target for a one-unit increase in its feature, holding the other features fixed. In Python, scikit-learn provides linear regression (and related models such as logistic regression) whose fitted coefficients can be read directly. This simplicity makes linear models well suited to applications that require explainability, such as finance and healthcare. Techniques such as SHAP and LIME can further clarify individual predictions, supporting trust and compliance in critical decision-making.
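A minimal sketch of reading coefficients, assuming scikit-learn and NumPy and using synthetic data generated from known coefficients (2 and -3) so the fitted values can be checked:

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Synthetic data (illustrative only): y = 2*x0 - 3*x1 + 5 + small noise
rng = np.random.default_rng(0)
X = rng.normal(size=(500, 2))
y = 2.0 * X[:, 0] - 3.0 * X[:, 1] + 5.0 + rng.normal(scale=0.1, size=500)

model = LinearRegression().fit(X, y)

# Each coefficient is the change in the prediction for a one-unit
# increase in that feature, holding the other feature fixed.
for name, coef in zip(["x0", "x1"], model.coef_):
    print(f"{name}: {coef:+.2f}")
print(f"intercept: {model.intercept_:.2f}")
```

Coefficients are only directly comparable when features share a scale; standardizing the inputs first (for example with StandardScaler) is a common choice.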

3.2. Decision Trees as Interpretable Models

Decision trees are highly interpretable because of their hierarchical, rule-based structure. Each internal node tests a feature against a threshold, and each path from the root to a leaf reads as an if-then rule that leads to a prediction. In Python, scikit-learn implements single decision trees, which can be plotted or exported as text, along with feature importance scores. XGBoost builds ensembles of boosted trees, which are usually more accurate but much harder to read than a single tree. This transparency makes shallow trees well suited to applications that require explainability. Tree-based models also support efficient SHAP computation (TreeSHAP), which helps explain ensemble predictions in critical decision-making scenarios.
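As a sketch, assuming scikit-learn and a synthetic dataset whose label depends only on whether x0 exceeds 5, a shallow tree can be printed as if-then rules with export_text:

```python
import numpy as np
from sklearn.tree import DecisionTreeClassifier, export_text

# Synthetic data (illustrative only): the label depends on a single threshold of x0
rng = np.random.default_rng(0)
X = rng.uniform(0, 10, size=(300, 2))
y = (X[:, 0] > 5).astype(int)

tree = DecisionTreeClassifier(max_depth=2, random_state=0).fit(X, y)

# export_text renders the fitted tree as nested if-then rules
rules = export_text(tree, feature_names=["x0", "x1"])
print(rules)

# Impurity-based importances sum to 1; here only x0 is used for splitting
print(dict(zip(["x0", "x1"], tree.feature_importances_.round(3))))
```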

3.3. Feature Importance in Model Interpretation

Feature importance measures which variables most influence a model’s predictions, which aids understanding and trust. Global methods, such as permutation importance and impurity-based importance in tree models, rank features across a whole dataset, while SHAP values and LIME quantify each feature’s contribution to individual predictions. In Python, the SHAP library and scikit-learn provide tools to calculate and visualize these measures. These methods help identify key predictors, simplify models, and make decision-making transparent, fostering trust and compliance in machine learning systems.
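Permutation importance is one model-agnostic way to compute global feature importance: shuffle one feature on held-out data and measure how much the score drops. A sketch with scikit-learn’s permutation_importance, assuming synthetic data in which x2 is pure noise:

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split

# Synthetic data (illustrative only): x0 matters most, x1 a little, x2 not at all
rng = np.random.default_rng(0)
X = rng.normal(size=(600, 3))
y = 4.0 * X[:, 0] + 1.0 * X[:, 1] + rng.normal(scale=0.1, size=600)

X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)
model = RandomForestRegressor(n_estimators=100, random_state=0).fit(X_train, y_train)

# Shuffle each column of the held-out data and record the drop in R^2
result = permutation_importance(model, X_test, y_test, n_repeats=10, random_state=0)
for name, mean, std in zip(["x0", "x1", "x2"], result.importances_mean, result.importances_std):
    print(f"{name}: {mean:.3f} +/- {std:.3f}")
```

Computing importance on held-out data, rather than the training set, avoids rewarding features the model merely overfits to.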

Model-Agnostic Interpretability Methods

Model-agnostic interpretability methods such as LIME and SHAP can explain predictions from any model, because they only need access to the model’s inputs and outputs. These techniques, available as Python libraries, bring transparency to complex systems.

4.1. LIME (Local Interpretable Model-agnostic Explanations)

LIME explains an individual prediction by fitting a simple, interpretable model around it. It perturbs the instance to generate nearby samples, queries the complex model on those samples, weights them by their proximity to the original instance, and fits a sparse linear model to the results; the linear model’s coefficients serve as the explanation. Because it only needs the model’s predictions, LIME can be applied without modifying the underlying model. Python implementations break a prediction down into per-feature contributions. Its local focus keeps explanations relevant to the specific instance, although they may not describe the model’s behavior elsewhere. LIME is widely used where clear, actionable insight into individual predictions from complex systems is needed.
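The LIME procedure can be sketched from scratch in a few lines. This is a simplified illustration, not the lime package: the black-box function, the Gaussian perturbation scale, and the kernel width are all hypothetical choices, and the real library adds details such as sampling from training-data statistics, optional discretization, and feature selection.

```python
import numpy as np
from sklearn.linear_model import Ridge

def black_box(X):
    """Hypothetical opaque model: nonlinear in x0, linear in x1."""
    return X[:, 0] ** 2 + 3.0 * X[:, 1]

def lime_style_explanation(predict_fn, x, n_samples=5000, scale=0.1, kernel_width=0.25, seed=0):
    """LIME-style sketch: perturb around x, weight by proximity, fit a weighted linear surrogate."""
    rng = np.random.default_rng(seed)
    # 1. Sample perturbations in a small neighborhood of the instance
    Z = x + rng.normal(scale=scale, size=(n_samples, x.size))
    # 2. Query the black-box model on the perturbed samples
    preds = predict_fn(Z)
    # 3. Weight samples with an exponential kernel on their distance to x
    dist = np.linalg.norm(Z - x, axis=1)
    weights = np.exp(-(dist ** 2) / kernel_width ** 2)
    # 4. Fit an interpretable linear surrogate; its coefficients are the explanation
    surrogate = Ridge(alpha=1e-3).fit(Z, preds, sample_weight=weights)
    return surrogate.coef_

x = np.array([2.0, 1.0])
coef = lime_style_explanation(black_box, x)
print(coef)  # local slopes; the true gradient at x is [4, 3]
```

The surrogate recovers the local slope of x0 squared at x0 = 2 even though the black box is nonlinear, which is exactly the sense in which LIME explanations are local.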

4.2. SHAP (SHapley Additive exPlanations) Values

SHAP values attribute a prediction to its features using Shapley values from cooperative game theory. Each feature’s SHAP value is its average marginal contribution over all possible coalitions of the other features, and the values add up to the difference between the prediction and the model’s expected output. This additive property gives a clear, per-prediction view of feature importance. Python’s SHAP library works with popular ML frameworks and includes visualizations such as summary and dependence plots. Its consistency property (if a model changes so that a feature’s contribution increases, that feature’s attribution does not decrease) makes interpretations more reliable. SHAP is particularly effective for explaining black-box models across diverse applications.
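To make the Shapley definition concrete, the sketch below computes exact attributions by enumerating every coalition. It assumes a hypothetical three-feature model and fills in “absent” features by averaging over a background sample; the SHAP library uses faster algorithms (such as TreeSHAP or KernelSHAP) because this enumeration grows exponentially with the number of features.

```python
import itertools
import math
import numpy as np

def exact_shapley(predict_fn, x, background):
    """Exact Shapley values by enumerating all coalitions (only feasible for a few features).

    Features outside a coalition take their values from background rows and the
    predictions are averaged: an interventional value function assumed for this sketch.
    """
    n = x.size

    def value(coalition):
        Z = background.copy()
        idx = list(coalition)
        if idx:
            Z[:, idx] = x[idx]  # features in the coalition take the explained instance's values
        return predict_fn(Z).mean()

    phi = np.zeros(n)
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            for S in itertools.combinations(others, size):
                # Shapley weight |S|! (n - |S| - 1)! / n!
                weight = math.factorial(size) * math.factorial(n - size - 1) / math.factorial(n)
                phi[i] += weight * (value(S + (i,)) - value(S))
    return phi

def model(X):
    # Hypothetical black box: a linear term plus an interaction
    return 2.0 * X[:, 0] + X[:, 1] * X[:, 2]

rng = np.random.default_rng(0)
background = rng.normal(size=(200, 3))
x = np.array([1.0, 2.0, -1.0])
phi = exact_shapley(model, x, background)
print(phi)
# Efficiency: attributions sum to f(x) minus the average background prediction
print(phi.sum(), model(x[None, :])[0] - model(background).mean())
```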

4.3. Partial Dependence Plots (PDPs) and Individual Conditional Expectation (ICE) Plots

Partial Dependence Plots (PDPs) show how the average predicted outcome changes as one or two features vary, averaging the model’s predictions over the observed values of all other features. Individual Conditional Expectation (ICE) plots draw one curve per instance, showing how that instance’s prediction changes as the feature varies; the PDP is the average of the ICE curves. ICE plots can therefore reveal heterogeneous effects and interactions that the average hides. Both tools help identify feature effects and non-linear relationships. In Python, scikit-learn’s inspection module computes both, and matplotlib renders the plots.
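The PDP/ICE relationship can be computed directly: sweep one feature over a grid for every row (ICE), then average the curves (PDP). A sketch assuming scikit-learn’s GradientBoostingRegressor and a synthetic target in which the effect of x0 flips sign depending on x1, a case where the average hides the individual effects:

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor

def ice_and_pdp(model, X, feature, grid):
    """ICE: one prediction curve per row as `feature` sweeps `grid`; PDP: their average."""
    ice = np.empty((X.shape[0], grid.size))
    for k, value in enumerate(grid):
        X_mod = X.copy()
        X_mod[:, feature] = value  # set the feature to this grid value for every row
        ice[:, k] = model.predict(X_mod)
    pdp = ice.mean(axis=0)
    return ice, pdp

# Synthetic data (illustrative only): x0 raises y when x1 > 0 and lowers it when x1 < 0
rng = np.random.default_rng(0)
X = rng.uniform(-2, 2, size=(400, 2))
y = X[:, 0] * np.sign(X[:, 1]) + rng.normal(scale=0.1, size=400)
model = GradientBoostingRegressor(random_state=0).fit(X, y)

grid = np.linspace(-2, 2, 9)
ice, pdp = ice_and_pdp(model, X, feature=0, grid=grid)
print(np.round(pdp, 2))  # the averaged curve is much flatter than the individual curves
```

For plotting, scikit-learn’s PartialDependenceDisplay.from_estimator with kind="both" draws the ICE curves and their average together.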

Python Libraries for Interpretable Machine Learning

Python libraries like scikit-learn, SHAP, and LIME provide essential tools for building and explaining transparent models, fostering trust and understanding in machine learning applications.

5.1. Scikit-learn: Tools for Model Interpretability

Scikit-learn provides several tools for model interpretability. Coefficients of linear models and impurity-based feature importances of tree-based models offer direct insight, and the sklearn.inspection module provides partial dependence plots and permutation importance for analyzing feature effects. Scikit-learn models can also be explained with external libraries such as SHAP and LIME. Together, these tools help balance accuracy with interpretability in real-world applications, which makes scikit-learn a common choice for building clear, interpretable models in Python.

5.2. XGBoost and SHAP Integration

XGBoost, a gradient boosting library, works closely with SHAP (SHapley Additive exPlanations). SHAP’s TreeExplainer computes exact SHAP values for tree ensembles efficiently, and XGBoost can also return per-feature SHAP contributions directly from its predict method. SHAP values show how each feature contributes to a prediction, making complex XGBoost models more transparent, and SHAP interaction values extend this to pairs of features. By combining XGBoost’s predictive performance with SHAP’s explanations, users can build models that are both accurate and explainable, which is vital for real-world applications.

5.3. LIME and Python Implementation

LIME (Local Interpretable Model-agnostic Explanations) is a popular tool for explaining black-box models. In Python, the lime package explains individual predictions by fitting interpretable local surrogate models, showing how specific features influence each prediction. Generating an explanation typically takes only a few lines of code: build an explainer from the training data, then pass an instance and the model’s prediction function. Because LIME works after training, it adds transparency without changing the model or its performance. Its flexibility and simplicity have made it widely adopted in the data science community.

Applications of Interpretable Machine Learning

Interpretable machine learning is applied in healthcare, finance, and education, using Python tools to build transparent predictive models that support trust and compliance with domain-specific requirements.

6.1. Healthcare: Transparent Predictive Models

In healthcare, interpretable machine learning supports transparent predictive models for disease diagnosis, treatment planning, and patient risk assessment. With Python libraries such as scikit-learn and SHAP, models can provide clear explanations that help clinicians judge whether to trust and act on a prediction. This is critical for patient safety and regulatory compliance, and it makes healthcare decisions more accountable while still benefiting from advanced machine learning techniques.

6.2. Finance: Explainable Credit Risk Models

In finance, interpretable machine learning is crucial for credit risk assessment, where models must be transparent and comply with regulations. With Python tools such as LIME and SHAP, financial institutions can explain complex models and build trust among stakeholders. Explanations help identify the key factors behind each credit decision, catch errors, and check for fairness. Clear explanations are vital for accountability and for meeting regulatory requirements in the financial sector.

6.3. Education: Interpretable Student Performance Models

Interpretable machine learning can improve education by providing transparent models that predict student performance. With Python tools such as scikit-learn and LIME, educators can identify the factors that influence academic outcomes, such as how attendance or prior grades affect performance, and plan personalized interventions. Transparency builds trust among stakeholders and supports fair, data-driven decisions. It also helps institutions understand student needs and allocate resources to improve learning outcomes.

Challenges and Limitations

Balancing accuracy and simplicity is challenging. Complex models like neural networks are hard to interpret, and high-dimensional data complicates analysis, requiring advanced techniques.

7.1. Balancing Model Accuracy and Interpretability

Striking a balance between model accuracy and interpretability is a significant challenge. Complex models often achieve high accuracy but lack transparency, while simpler models, like linear regression, are interpretable but may sacrifice performance. Techniques such as regularization can help simplify models without drastically reducing accuracy. Additionally, Python libraries like LIME and SHAP offer tools to explain complex models, bridging the gap between accuracy and interpretability.

7.2. Handling High-Dimensional Data

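High-dimensional data poses a challenge for interpretable machine learning, because models with many inputs tend to be complex and opaque. Feature selection methods, such as univariate statistical tests or Lasso regularization, keep a small subset of the original features, so the resulting model remains directly interpretable. Dimensionality reduction techniques such as PCA also simplify the data while retaining most of its variance, but each principal component is a combination of all original features, which can make the model harder to interpret. In Python, scikit-learn provides tools for both feature selection and dimensionality reduction, helping create more interpretable models, often with little loss of performance. These methods help keep models transparent even when the number of features is large.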
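A sketch contrasting the two approaches, assuming scikit-learn and synthetic data with 50 features of which only the first three matter:

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.feature_selection import SelectKBest, f_regression

# Synthetic data (illustrative only): 50 features, only columns 0-2 affect y
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 50))
y = 3.0 * X[:, 0] - 2.0 * X[:, 1] + 1.5 * X[:, 2] + rng.normal(scale=0.5, size=200)

# Feature selection keeps original, nameable columns
selector = SelectKBest(score_func=f_regression, k=3).fit(X, y)
print("selected columns:", selector.get_support(indices=True))

# PCA keeps variance, but every component mixes all 50 original columns
pca = PCA(n_components=3).fit(X)
print("nonzero loadings per component:", (np.abs(pca.components_) > 1e-12).sum(axis=1))
```

PCA is also unsupervised, so its components need not align with the features that actually drive the target.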

7.3. Explaining Complex Models like Neural Networks

Neural networks are inherently complex, which makes them hard to interpret. Techniques such as LIME and SHAP help explain their decisions by estimating feature contributions. In Python, libraries such as SHAP and Alibi provide tools to break down predictions and better understand model behavior. Because these explanations are computed after training, they make deep learning models more transparent without changing their performance.

Best Practices for Implementing Interpretable Models

Adopting interpretable practices involves simplifying models, leveraging domain expertise, and regularly auditing for transparency and trust, ensuring ethical and reliable AI solutions with tools like SHAP and LIME.

8.1. Simplifying Models Without Losing Performance

Simplifying a model often improves interpretability with little loss of accuracy. Techniques include feature selection, regularization, and choosing inherently interpretable models such as linear models or shallow decision trees. Regularization methods such as the Lasso (L1 penalty) reduce complexity by shrinking the coefficients of unhelpful features to exactly zero, which removes them from the model. Python libraries such as scikit-learn provide tools for all of these. Where a complex model is still needed, model-agnostic methods such as LIME and SHAP explain its predictions. Balancing simplicity and performance is key to building trust in machine learning systems.
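A sketch of Lasso-based simplification, assuming scikit-learn and synthetic data in which only three of ten features affect the target; alpha=0.1 is an illustrative choice and would normally be tuned, for example with LassoCV:

```python
import numpy as np
from sklearn.linear_model import Lasso

# Synthetic data (illustrative only): only columns 0, 1, 2 affect y
rng = np.random.default_rng(0)
X = rng.normal(size=(500, 10))
y = 3.0 * X[:, 0] - 2.0 * X[:, 1] + 1.5 * X[:, 2] + rng.normal(scale=0.5, size=500)

lasso = Lasso(alpha=0.1).fit(X, y)

# The L1 penalty drives coefficients of unhelpful features to exactly zero
kept = np.flatnonzero(lasso.coef_)
print("kept features:", kept)
print("coefficients:", np.round(lasso.coef_[kept], 2))
```

The surviving coefficients are slightly shrunk toward zero; refitting an unpenalized model on the kept features is a common follow-up when unbiased estimates matter.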

8.2. Regular Model Auditing and Testing

Regular auditing and testing are crucial for ensuring model reliability and fairness. Held-out evaluation and cross-validation measure performance, while SHAP values and partial dependence plots reveal whether a model relies on inappropriate features, which can expose bias. In Python, scikit-learn provides validation tools, and Alibi provides explanation methods that support audits. Continuous monitoring keeps models transparent and accountable as data changes, addressing ethical concerns. These practices help organizations maintain trust and compliance and support responsible AI development and deployment.
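One simple audit is to compare metrics across subgroups of the data. The sketch below uses plain NumPy; the labels, predictions, and group attribute are hypothetical values chosen for illustration:

```python
import numpy as np

def group_report(y_true, y_pred, groups):
    """Per-group accuracy and positive-prediction rate for a simple fairness check."""
    report = {}
    for g in np.unique(groups):
        mask = groups == g
        report[g] = {
            "accuracy": float(np.mean(y_true[mask] == y_pred[mask])),
            "positive_rate": float(np.mean(y_pred[mask])),
        }
    return report

# Hypothetical audit data: labels, model predictions, and a group attribute
y_true = np.array([1, 0, 1, 1, 0, 1, 0, 0])
y_pred = np.array([1, 0, 1, 0, 0, 0, 0, 1])
groups = np.array(["A", "A", "A", "A", "B", "B", "B", "B"])

report = group_report(y_true, y_pred, groups)
for g, metrics in report.items():
    print(g, metrics)

# Gap in positive-prediction rates between the two groups
gap = abs(report["A"]["positive_rate"] - report["B"]["positive_rate"])
print(f"positive-rate gap: {gap:.2f}")
```

A large gap does not prove unfairness on its own, but it flags where explanations such as SHAP values should be examined more closely.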

8.3. Using Domain Knowledge for Model Design

Integrating domain expertise into model design improves interpretability and relevance. Domain knowledge helps identify meaningful features, inform model structure, and guide parameter tuning. For instance, in healthcare, clinical insight can shape which features a predictive model uses, and Python tools such as scikit-learn and XGBoost support transparent feature engineering. Both libraries can also enforce monotonic constraints, for example requiring predicted risk never to decrease as a known risk factor increases. By aligning models with domain-specific constraints, developers make outputs understandable and actionable, which fosters trust and practical use in industries such as finance and education.

Future Directions in Interpretable Machine Learning

Future directions include advancing Explainable AI (XAI), improving model robustness, and integrating domain knowledge. Python is likely to play a central role in developing scalable, interpretable solutions.

9.1. Advances in Explainable AI (XAI)

Advances in Explainable AI (XAI) focus on techniques that make complex models transparent. Python libraries such as SHAP and LIME have helped drive this progress by making detailed model explanations practical. These tools reveal feature importance and decision-making processes, fostering trust in AI systems. By integrating XAI into machine learning workflows, researchers can build models that are both powerful and understandable, supporting ethical and reliable deployment across industries.

9.2. Adversarial Robustness and Interpretability

Adversarial robustness and interpretability are both critical for reliable AI systems. Because models can be fooled by adversarial inputs (small, deliberate perturbations that change a prediction), they need to be both robust and transparent. Python frameworks such as TensorFlow and Keras support research into defenses against such attacks. Techniques such as adversarial training and robust feature engineering are being explored to improve resilience without sacrificing interpretability, fostering trust in AI decision-making across applications. This dual focus aims to make models both secure and understandable.

9.3. Interpretable Models for Deep Learning

Deep learning models, though powerful, are often complex and hard to interpret. Techniques such as layer-wise relevance propagation (LRP), attention visualization, and SHAP values help make them more transparent, and they can be applied to models built with Python frameworks such as TensorFlow and Keras. LRP, for example, propagates the network’s output backward through its layers and distributes it as relevance scores over the input features. These methods help practitioners understand model decisions, fostering trust and helping diagnose problems in applications such as image and natural language processing.
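To illustrate how layer-wise relevance propagation distributes an output over the inputs, here is a NumPy sketch of the LRP-epsilon rule for a tiny bias-free ReLU network with random, hypothetical weights. It is not a TensorFlow or Keras implementation; it only shows the conservation idea, where input relevances approximately sum to the output.

```python
import numpy as np

def lrp_epsilon(x, W1, W2, eps=1e-9):
    """LRP-epsilon sketch for a bias-free network: y = relu(x @ W1) @ W2 (scalar output)."""
    z1 = x @ W1                  # hidden pre-activations
    h = np.maximum(z1, 0.0)      # ReLU activations
    y = h @ W2                   # scalar output

    def stabilize(z):
        # Add eps with the sign of z so denominators never hit zero
        return z + np.where(z >= 0, eps, -eps)

    # Output -> hidden: each unit receives relevance in proportion to h_j * W2_j
    R_h = h * W2 * (y / stabilize(y))
    # Hidden -> input: redistribute each unit's relevance in proportion to x_i * W1_ij
    s = R_h / stabilize(z1)
    R_x = x * (W1 @ s)
    return y, R_x

# Hypothetical network: 4 inputs, 8 hidden ReLU units, 1 output
rng = np.random.default_rng(0)
W1 = rng.normal(size=(4, 8))
W2 = rng.normal(size=8)
x = rng.normal(size=4)

y, R = lrp_epsilon(x, W1, W2)
print("output:", y)
print("input relevances:", np.round(R, 3))
print("relevance sum:", R.sum())  # approximately equals the output (conservation)
```

Inactive ReLU units receive zero relevance, so only the paths that actually contributed to the output are credited.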

Interpretable machine learning with Python improves model transparency, fostering trust and accountability. Tools such as SHAP and LIME explain complex models, making their insights accessible for informed decision-making.

10.1. Recap of Key Concepts

Interpretable machine learning emphasizes transparency and trust in model decisions. Key concepts include using Python libraries such as scikit-learn and XGBoost to build interpretable models, and techniques such as SHAP values and LIME to explain feature importance and individual predictions. Applications span healthcare, finance, and education, while the central challenge is balancing accuracy with simplicity. Regular auditing and integrating domain knowledge are best practices. Together, these methods help make models both performant and understandable, fostering confidence in their deployment and outcomes across industries.

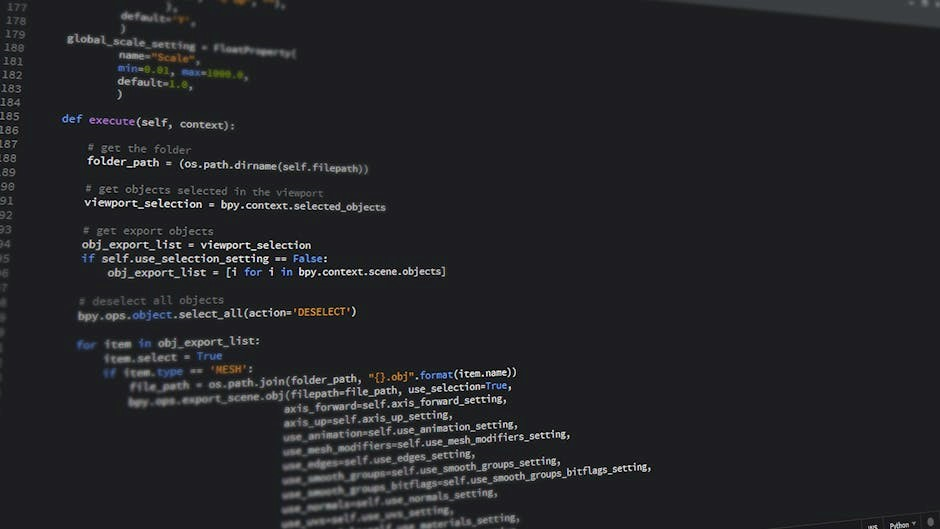

10.2. The Role of Python in Interpretable Machine Learning

Python plays a pivotal role in interpretable machine learning through libraries such as scikit-learn, XGBoost, and SHAP. These tools support transparent models, feature importance analysis, and explanations of individual predictions. Python’s flexibility and large ecosystem make it easy to integrate interpretability techniques into existing workflows. Libraries such as LIME and Alibi add further explanation methods, making Python a cornerstone for building trustworthy, understandable models. Its accessibility and robustness have made it a leading language for advancing interpretable machine learning practice.